Accelerate large AI model training securely with HPE ProLiant Compute XD685 and NVIDIA

Power demanding AI workloads cost-efficiently and securely with HPE ProLiant Compute XD680

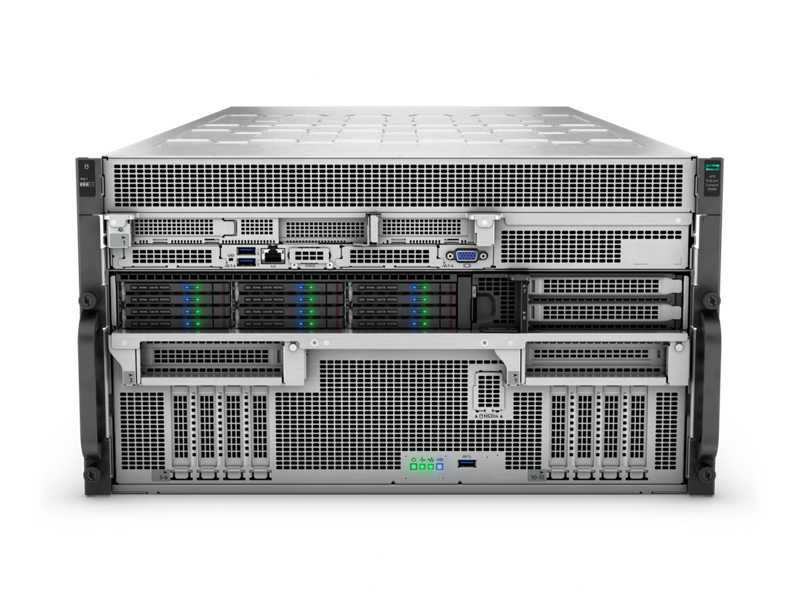

HPE ProLiant Compute XD685 Air Cooling Server

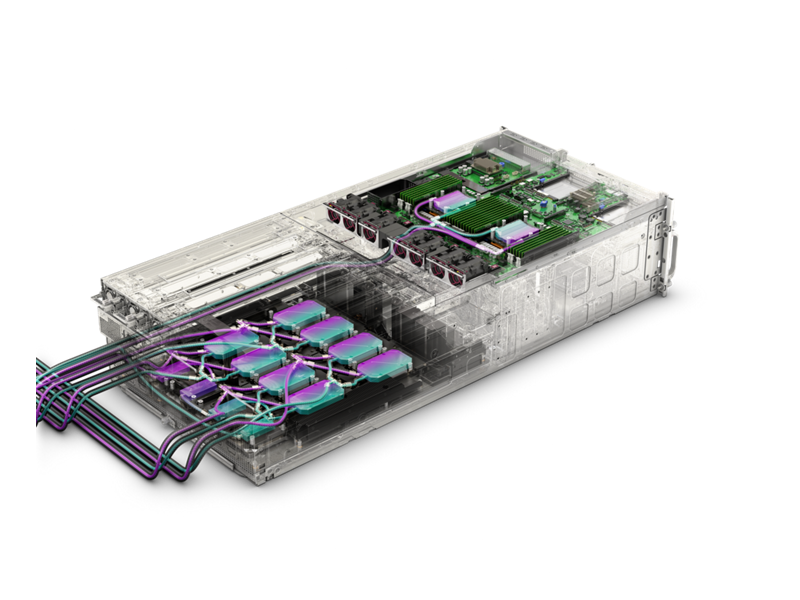

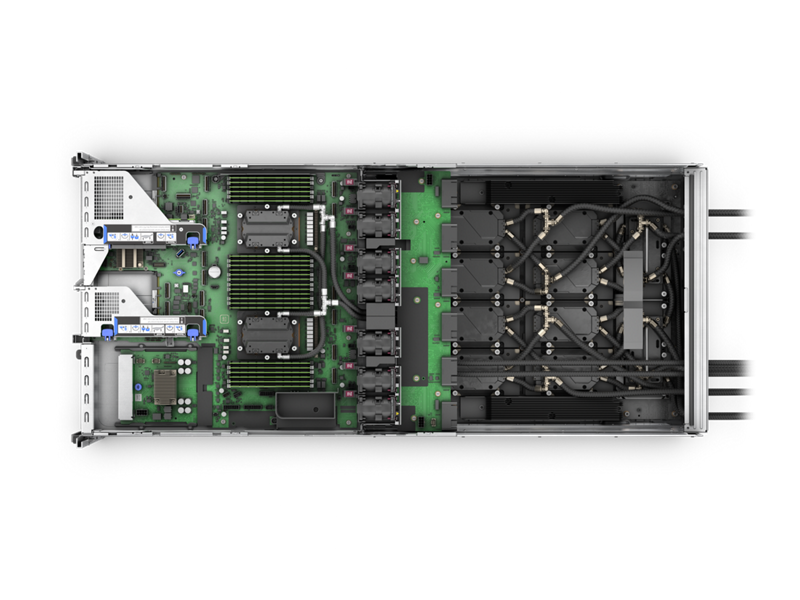

Do you need a highly performant, secure and energy-efficient AI cluster? The HPE ProLiant Compute XD685 leverages a purpose-built architecture and is designed to accelerate large, complex AI model training and tuning workloads. Powered by eight of the latest accelerators from NVIDIA® or AMD, this server is optimized for use in large language model training, natural language processing, and multimodal training. Optional direct liquid cooling (DLC) helps organizations meet escalating power requirements, advance sustainability goals, and lower operational costs. Managed by HPE iLO, the server offers seamless management and robust security. HPE Services provides expertise for global installation and quick deployment of large AI clusters, offering stability and operational excellence.

SKU # S4Q28A

Contact Us

Chat with us: hpestoresupport@hpe.com

Maximize your HPE ProLiant Compute XD685

What's New

- Support for eight NVIDIA H200 SXM Tensor Core GPUs (air-cooled or liquid-cooled), eight NVIDIA Blackwell GPUs (liquid-cooled), or eight AMD Instinct™ MI300X accelerators (air-cooled)

- Modular chassis designed by Hewlett Packard Enterprise. 5U for liquid-cooled and 6U for air-cooled configurations.

- 24x DDR5-6400 RDIMMs with ECC, 12 memory channels per CPU

- Built-in security features with HPE iLO 6

- Seamless cluster management with HPE Performance Cluster Manager (HPCM)

- 2x 5th Generation AMD EPYC™ processors

Speed and Flexibility to Increase your Competitive Advantage

- The HPE ProLiant Compute XD685 provides a choice of eight NVIDIA H200 Tensor Core or NVIDIA Blackwell GPUs or eight AMD Instinct™ MI300X accelerators, delivering leading AI performance for training and tuning tasks.

- In liquid-cooled configurations, a 5U chassis designed by HPE helps you optimize pod deployment, leveraging a compact 8-node-per-rack arrangement.

- HPE Performance Cluster Manager expedites initial cluster setup and software deployment, and provides continued resilience and reliability.

- HPE Factory Express Services builds, integrates, validates, fully tests, and customizes your solution in the factory, facilitating quicker on-site deployment.

- You get flexibility and agility with a customized IT environment that lets you run the right workload at the right place, at the right time. Whether you want a solution on-premises, or deploy a hybrid model, we offer flexible consumption and deployment models to suit your requirements and goals.

AMD is a trademark of Advanced Micro Devices, Inc. ENERGY STAR is a registered mark owned by the U.S. government. NVIDIA is a trademark and/or registered trademark of NVIDIA Corporation in the U.S. and other countries. All third-party marks are property of their respective owners.